Model Fairness

ML fairness is a model’s quality or state of being fair or impartial. It is usually assessed through two kinds of harm: harm of allocation and harm of quality of service.

What is Machine Learning Fairness?

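“Fairness” is a widely used term in the artificial intelligence and machine learning landscape. In general usage, it means the quality or state of being fair or impartial. For an ML model, it means that the model’s decisions and performance do not systematically disadvantage particular groups of people.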

AI systems can behave unfairly for several reasons. One is societal bias reflected in the training data. Another is underrepresentation, where too few data points about a specific subpopulation lead to unfair decisions for that group.

To understand unfair behavior in ML models, we define it by the harm it does to users rather than by its causes, such as prejudice or societal bias. Unfair AI systems can contribute to different harms, including:

- Harm of allocation: An AI system extends or withholds resources, opportunities, or information unevenly across subpopulations. Examples include school admissions, recruitment, and lending, where a model may be better at picking suitable candidates from one group of people than from other groups.

- Harm of quality of service: An AI system does not work as well for one group as it does for another. For example, a voice recognition system might fail to serve women as well as it serves men.
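Both harms can be made measurable by comparing simple statistics across groups. The following is a minimal sketch, not a definitive method: it assumes binary decisions, treats the gap in selection rate as one common proxy for allocation harm, and treats the gap in accuracy as a proxy for quality-of-service harm. The function name, group labels, and toy data are hypothetical.

```python
from collections import defaultdict

def per_group_rates(y_true, y_pred, groups):
    """Return {group: {"selection_rate": ..., "accuracy": ...}}."""
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "correct": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["selected"] += int(pred == 1)     # positive decision, e.g. admitted
        c["correct"] += int(pred == truth)  # prediction matches the true label
    return {
        g: {"selection_rate": c["selected"] / c["n"],
            "accuracy": c["correct"] / c["n"]}
        for g, c in counts.items()
    }

# Hypothetical toy data: 1 = positive outcome, two groups "a" and "b".
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = per_group_rates(y_true, y_pred, groups)

# Proxy for allocation harm: how differently positive decisions are handed out.
allocation_gap = abs(rates["a"]["selection_rate"] - rates["b"]["selection_rate"])
# Proxy for quality-of-service harm: how differently the model performs.
quality_gap = abs(rates["a"]["accuracy"] - rates["b"]["accuracy"])
```

On this toy data, group "b" never receives a positive decision and the model is right for that group only half the time, so both gaps are large.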

Even when discrimination against a subpopulation is unintentional, checking AI solutions for fairness is imperative. ML fairness tools such as Fairlearn, discussed later in this article, support this check.

Why Is Machine Learning Fairness Important?

ML models become biased because of human bias and/or historical bias in the training dataset. Models learn from such biased datasets and can make unfair decisions. When several models are chained together to serve a single purpose, one model’s biased output becomes the next model’s input, so bias can propagate and grow through the ML system.

If left unchecked, unfair AI systems can cause long-lasting losses and erode business value for the organization, leading to customer attrition, a damaged reputation, and reduced transparency.

For these reasons, ML fairness is critical for modern businesses. It fosters consumer trust and helps demonstrate to consumers that their concerns matter. It also helps ensure compliance with rules and regulations set up by governments.

Machine Learning Fairness: The Way Ahead

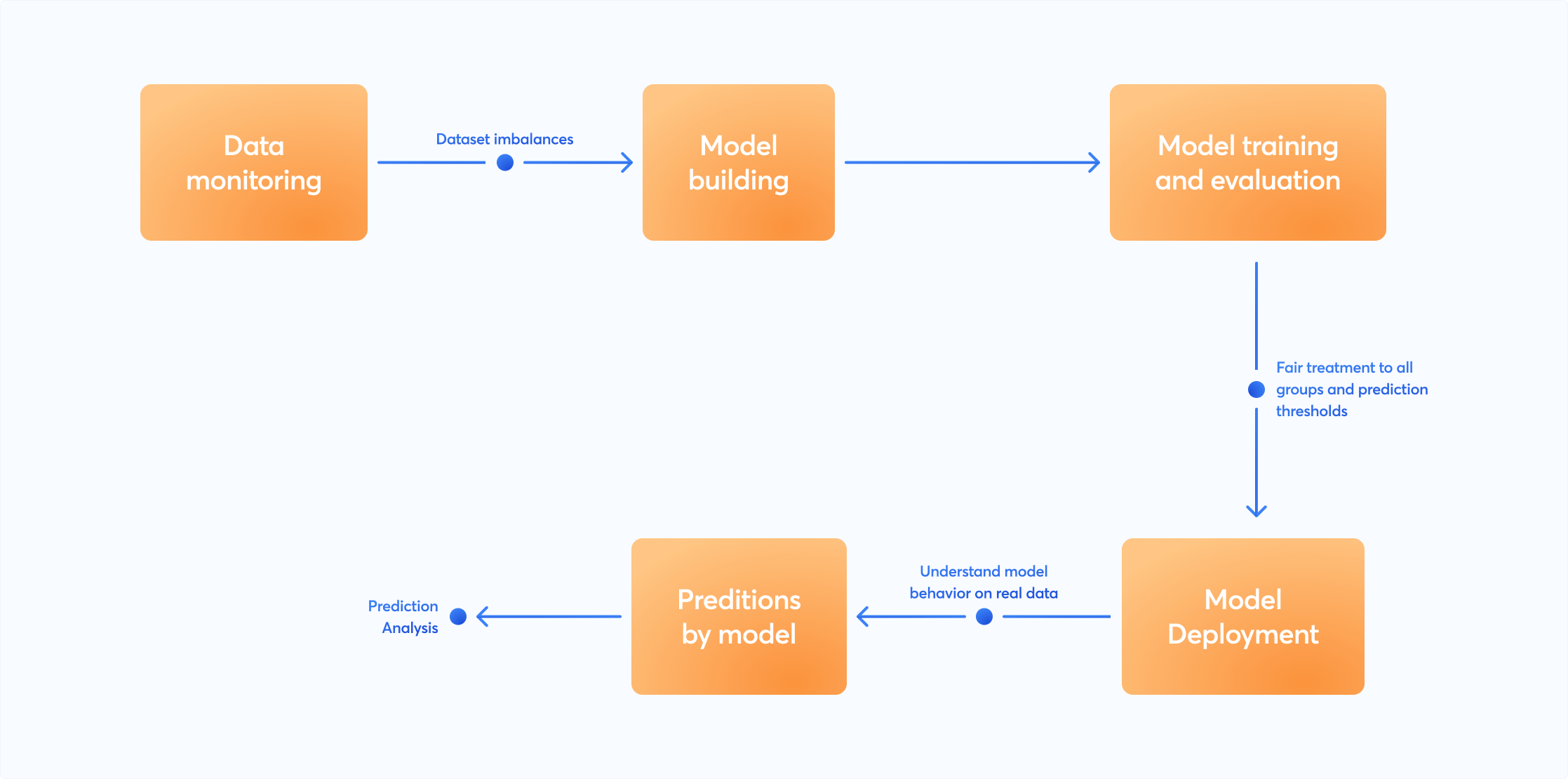

One of the best practices for avoiding bias is to integrate fairness analysis throughout the ML process instead of treating it as a separate initiative. Reevaluating models from a fairness perspective involves steps such as finding dataset imbalances, setting prediction thresholds, understanding production model behavior, and performing prediction analysis.
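Two of these steps, finding dataset imbalances and checking how a prediction threshold affects each group, can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the model outputs a score between 0 and 1, a single threshold turns scores into decisions, and the helper names and toy data are hypothetical.

```python
from collections import Counter

def group_shares(groups):
    """Fraction of the dataset that each group contributes."""
    total = len(groups)
    return {g: n / total for g, n in Counter(groups).items()}

def positive_rate_at(scores, groups, threshold):
    """Share of each group whose score meets or exceeds the threshold."""
    hits, sizes = Counter(), Counter(groups)
    for score, group in zip(scores, groups):
        hits[group] += int(score >= threshold)
    return {g: hits[g] / sizes[g] for g in sizes}

# Hypothetical toy data: group "b" is underrepresented.
groups = ["a"] * 6 + ["b"] * 2
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.55, 0.2]

shares = group_shares(groups)                       # dataset imbalance check
rates_050 = positive_rate_at(scores, groups, 0.5)   # per-group rate at 0.5
rates_060 = positive_rate_at(scores, groups, 0.6)   # per-group rate at 0.6
```

In this toy data, raising the threshold from 0.5 to 0.6 leaves group "a" unchanged but removes every positive decision for group "b". That is the kind of effect a threshold review is meant to surface.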

Many AI teams use quantitative fairness tools such as Fairlearn to assess and mitigate unfairness in ML models. Fairlearn is an open-source toolkit that helps assess and improve ML model fairness. Such tools give ML professionals a visualization dashboard and a set of algorithms for mitigating ML bias.
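To give a feel for what a mitigation algorithm can do, the sketch below illustrates one simple post-processing idea: choosing a separate decision threshold per group so that each group ends up with roughly the same selection rate. It is plain Python written for illustration and is not Fairlearn's API or implementation. The function names, the target rate, and the toy scores are all assumptions.

```python
def threshold_for_rate(scores, target_rate):
    """Score of the k-th highest item, where k is about target_rate of the group."""
    ranked = sorted(scores, reverse=True)
    k = max(1, round(target_rate * len(ranked)))
    return ranked[k - 1]

def per_group_thresholds(scores, groups, target_rate):
    """Pick one threshold per group so each selects about target_rate of its members."""
    by_group = {}
    for score, group in zip(scores, groups):
        by_group.setdefault(group, []).append(score)
    return {g: threshold_for_rate(s, target_rate) for g, s in by_group.items()}

# Hypothetical toy scores: the model scores group "b" systematically lower.
groups = ["a"] * 4 + ["b"] * 4
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]

thresholds = per_group_thresholds(scores, groups, target_rate=0.5)
decisions = [int(s >= thresholds[g]) for s, g in zip(scores, groups)]
```

With a single threshold of 0.5, every member of group "a" and only one member of group "b" would be selected. With the per-group thresholds, half of each group is selected. Tied scores can push a group slightly above the target rate, and equalizing selection rates is only one of several possible fairness criteria, so the right choice depends on the application.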
